Developers revoke YAML support to protect against exploitation

The team behind TensorFlow, Google’s popular open source Python machine learning library, has revoked support for YAML due to an arbitrary code execution vulnerability.

YAML is a general-purpose format used to store data and pass objects between processes and applications. Many Python applications use YAML to serialize and deserialize objects.

According to an advisory on GitHub, TensorFlow and Keras, a wrapper library for TensorFlow, used an unsafe function to deserialize YAML-encoded machine learning models.

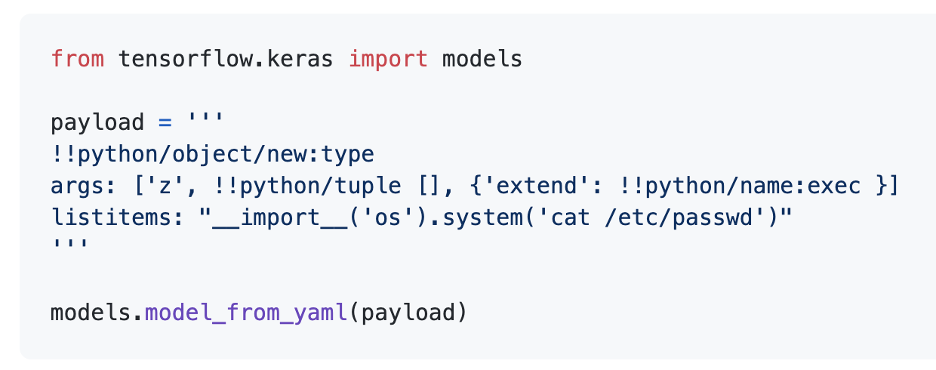

A proof-of-concept shows the vulnerability being exploited to return the contents of a sensitive system file.

“Given that YAML format support requires a significant amount of work, we have removed it for now,” the maintainers of the library said in their advisory.

Deserialization insecurity

“Deserialization bugs are a great attack surface for codes written in languages like Python, PHP, and Java,” Arjun Shibu, the security researcher who discovered the bug, told The Daily Swig.

“I searched for Pickle and PyYAML deserialization patterns in TensorFlow and, surprisingly, I found a call to the dangerous function yaml.unsafe_load().”


The function loads YAML input directly without sanitizing it, which makes it possible to embed malicious code in the data.
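To illustrate the mechanism, here is a minimal sketch that assumes the third-party PyYAML package is installed. It is not the researcher's proof-of-concept: the document below is a harmless stand-in chosen for this example, and it only asks the loader to call `os.getcwd()`. An attacker could name a far more dangerous function in the same position.

```python
import os

import yaml  # PyYAML, a third-party package (assumed to be installed)

# Benign stand-in for attacker-controlled input: the python/object/apply tag
# asks the loader to call os.getcwd() with an empty argument list.
untrusted_document = "!!python/object/apply:os.getcwd []"

# unsafe_load honours the tag, so the function runs during parsing.
result = yaml.unsafe_load(untrusted_document)

# The parsed "data" is the return value of the function call.
print(result == os.getcwd())
```

The point of the sketch is that the call happens inside the parser, before the application has had any chance to inspect the result.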

Unfortunately, insecure deserialization is a common practice.

“Researching further using code searching applications like Grep.app, I saw thousands of projects/libraries deserializing python objects without validation,” Arjun said. “Most of them were ML specific and take user input as parameters.”

Impact on machine learning applications

The use of serialization is very common in machine learning applications. Training models is a costly and slow process. Therefore, developers often use pre-trained models that have been stored in YAML or other formats supported by ML libraries such as TensorFlow.

“Since ML applications usually accept model configuration from users, I guess the availability of the vulnerability is common, making a large proportion of products at risk,” Arjun said.


Regarding the YAML vulnerability, Pin-Yu Chen, chief scientist of the RPI-IBM AI Research Collaboration at IBM Research, told The Daily Swig:

“From my understanding, most cloud-based AI/ML services would require YAML files to specify the configurations – so I would say the security indication is huge.”

A lot of the research around machine learning security is focused on adversarial attacks – modified pieces of data that target the behavior of ML models. But this latest discovery is a reminder that, as with all other applications, secure coding is an important aspect of machine learning.

“Though these attacks are not targeting the machine learning model itself, there is no denying that they are serious threats and require immediate actions,” Chen said.

Machine learning security

Google has patched more than 100 security bugs in TensorFlow since the beginning of the year. It has also published comprehensive security guidelines on running untrusted models, sanitizing untrusted user input, and securely serving models on the web.

“These vulnerabilities are easy to find and using vulnerability scanners can help,” Arjun said.

“Usually, there are alternatives with better security. Developers should use them whenever possible. For example, usage of unsafe_load() or load() with the default YAML loader can be replaced with the secure safe_load() function. The user input should be sanitized if there are no better alternatives.”
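The replacement Shibu describes might look like the following sketch, which again assumes PyYAML is installed. The helper name `load_model_config` and the sample configuration are hypothetical, invented for this example; they are not part of TensorFlow or Keras. The helper parses with `yaml.safe_load()` and additionally checks that the top-level value is a mapping.

```python
import yaml  # PyYAML, a third-party package (assumed to be installed)


def load_model_config(text):
    """Hypothetical helper: parse YAML with the safe loader and require a mapping."""
    try:
        # safe_load builds only plain YAML types (mappings, lists, strings, numbers).
        config = yaml.safe_load(text)
    except yaml.YAMLError as exc:
        # Python-specific tags such as python/object/apply are rejected here.
        raise ValueError(f"rejected YAML input: {exc}") from exc
    if not isinstance(config, dict):
        raise ValueError("model config must be a YAML mapping")
    return config


# An ordinary configuration parses into plain Python data.
good = load_model_config("name: demo\nlayers: [64, 32]\n")

# A document that tries to trigger a function call is refused.
try:
    load_model_config("!!python/object/apply:os.getcwd []")
    rejected = False
except ValueError:
    rejected = True
```

The type check is a design choice for this sketch rather than a requirement of the library: the safe loader stops code execution, while the check covers well-formed input that has an unexpected shape.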
