White paper systematically examines the attack while showcasing a ‘laundry list’ of new flaws

Researchers have released a new fuzzing tool for finding novel HTTP request smuggling techniques.

The tool, dubbed ‘T-Reqs’, was built by a team from Northeastern University, Boston, and Akamai.

In a white paper, the researchers discuss how they discovered a wealth of new vulnerabilities using the fuzzing tool, which they said can be used by bug bounty hunters and researchers alike.

HTTP history

HTTP request smuggling, which first emerged in 2005, interferes with how websites process sequences of HTTP requests received from users.

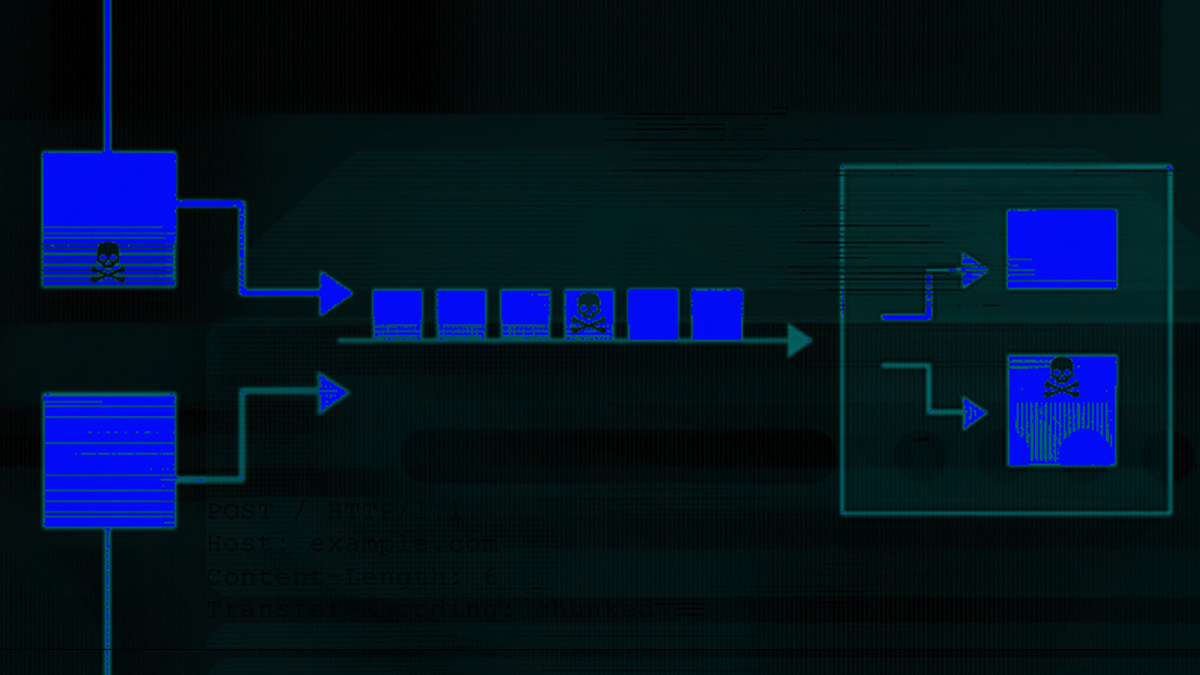

Front-end servers such as load balancers and reverse proxies typically forward multiple HTTP requests, one after another, to back-end servers over the same network connection.

If the front-end and back-end servers disagree about where one request ends and the next begins, attackers can smuggle hidden requests through the proxy.

This could have far-reaching consequences and lead to scenarios such as account hijacking and cache poisoning.

Previous research into the attack focused on the Content-Length and Transfer-Encoding headers, both of which tell a server where a request body ends.
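To make the classic header conflict concrete, here is a minimal Python sketch. It is a toy illustration, not code from the white paper: the two parsers, the host name `example.test`, and the sample request are all assumptions made for the example. One parser trusts Content-Length, the other trusts Transfer-Encoding, and the bytes they disagree on are what a back end would treat as the start of a second, smuggled request.

```python
# Toy illustration only: two simplified parsers reading the same request.
# Neither models a real server; the request below is a hypothetical sample.
RAW = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.test\r\n"
    b"Content-Length: 6\r\n"
    b"Transfer-Encoding: chunked\r\n"
    b"\r\n"
    b"0\r\n"
    b"\r\n"
    b"G"
)


def split_head(raw: bytes):
    """Return (headers dict, bytes following the blank line)."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    headers = {}
    for line in head.split(b"\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(b":")
        headers[name.strip().lower()] = value.strip()
    return headers, rest


def leftover_by_content_length(raw: bytes) -> bytes:
    """Bytes left on the connection if the body length comes from Content-Length."""
    headers, rest = split_head(raw)
    length = int(headers[b"content-length"].decode("ascii"))
    return rest[length:]


def leftover_by_chunked(raw: bytes) -> bytes:
    """Bytes left on the connection if the body is read as chunked (no trailers)."""
    _, rest = split_head(raw)
    pos = 0
    while True:
        line_end = rest.index(b"\r\n", pos)
        size = int(rest[pos:line_end].decode("ascii"), 16)
        pos = line_end + 2
        if size == 0:
            return rest[pos + 2:]  # skip the CRLF that ends the last chunk
        pos += size + 2  # skip chunk data and its trailing CRLF


print(leftover_by_content_length(RAW))  # nothing left over
print(leftover_by_chunked(RAW))         # the stray byte that starts a "new" request
```

In this sketch the Content-Length reader consumes all six body bytes, while the chunked reader stops at the zero-length chunk and leaves `G` behind on the connection.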


This new research instead focuses on HTTP request smuggling (HRS) as a system interaction problem involving at least two HTTP processors on the traffic path.

The paper reads: “These processors may not necessarily be individually buggy; but when used together, they disagree on the parsing or semantics of a given HTTP request, which leads to a vulnerability.

“This key aspect of HRS has not been explored in previous work. Next, previous attacks focus on malicious manipulation of the two aforementioned HTTP headers.

“Whether the remaining HTTP headers, or the rest of an HTTP request, could be tampered with to induce similar processing discrepancies remains uncharted territory.”

Tool for success

T-Reqs, which is shorthand for ‘two requests’, is a grammar-based HTTP fuzzer that generates HTTP requests and applies mutations to them to trigger potential server processing quirks.

It exercises two target servers with the same mutated request, and compares the responses to identify discrepancies that lead to smuggling attacks.
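The generate, mutate, and compare loop described here can be sketched in a few lines of Python. This is an illustrative approximation, not T-Reqs itself: the tiny grammar, the three mutations, and the two in-process "servers" (`body_length_strict` and `body_length_lenient`) are hypothetical stand-ins, whereas the real tool sends requests to actual server pairs and compares their responses.

```python
import random


def generate_request(rng: random.Random) -> str:
    """Build a well-formed request from a tiny, hypothetical grammar."""
    method = rng.choice(["GET", "POST"])
    body = "x" * rng.randint(0, 8)
    lines = [
        f"{method} / HTTP/1.1",
        "Host: example.test",
        f"Content-Length: {len(body)}",
    ]
    return "\r\n".join(lines) + "\r\n\r\n" + body


def mutate(request: str, rng: random.Random) -> str:
    """Apply one randomly chosen string mutation to the request."""
    mutations = [
        # Whitespace between the header name and the colon.
        lambda r: r.replace("Content-Length:", "Content-Length :", 1),
        # A signed length value.
        lambda r: r.replace("Content-Length: ", "Content-Length: +", 1),
        # A different protocol version.
        lambda r: r.replace("HTTP/1.1", "HTTP/1.0", 1),
    ]
    return rng.choice(mutations)(request)


def _headers(request: str):
    head = request.split("\r\n\r\n", 1)[0]
    for line in head.split("\r\n")[1:]:
        name, _, value = line.partition(":")
        yield name, value.strip()


def body_length_strict(request: str) -> int:
    """Toy parser: exact header name and digits only, otherwise no body."""
    for name, value in _headers(request):
        if name.lower() == "content-length" and value.isdigit():
            return int(value)
    return 0


def body_length_lenient(request: str) -> int:
    """Toy parser: tolerates padding around the name and a leading sign."""
    for name, value in _headers(request):
        if name.strip().lower() == "content-length":
            try:
                return int(value)
            except ValueError:
                return 0
    return 0


def find_discrepancies(seed: int = 0, rounds: int = 200):
    """Feed each mutated request to both parsers and keep the disagreements."""
    rng = random.Random(seed)
    findings = []
    for _ in range(rounds):
        request = mutate(generate_request(rng), rng)
        strict, lenient = body_length_strict(request), body_length_lenient(request)
        if strict != lenient:
            findings.append((request, strict, lenient))
    return findings


if __name__ == "__main__":
    for request, strict, lenient in find_discrepancies()[:3]:
        print(repr(request), "strict:", strict, "lenient:", lenient)
```

Each reported tuple is a request on which the two toy parsers disagree about the body length, which is the kind of discrepancy a tester would then examine by hand to see whether it is exploitable.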


Speaking to The Daily Swig, Akamai’s Kaan Onarlioglu said: “T-Reqs is a fuzzer that exercises server pairs in an experimental setup.

“It is a tool that discovers novel smuggling vulnerabilities. This is particularly useful for server developers, and in fact several vendors mentioned in our paper are now using it for their internal testing.

“T-Reqs is not designed to test live web applications; it is not a penetration testing tool that repeats previously known smuggling payloads. Burp Suite’s HTTP Request Smuggler extension is a much better fit for that.

“We envision that the community will enhance and use T-Reqs to find new vulnerabilities, and then integrate these payloads with their testing tools and processes.”


Onarlioglu said the team decided to explore the topic because the HTTP specification is extremely complex. With a “plethora of server technologies out there with their quirks”, they figured “there must be unfathomed opportunities to smuggle requests”.

The researcher said: “Our research tested this hypothesis. We systematically explored all parts of an HTTP request together with the pairwise combinations of 10 popular proxy/server technologies. We found a laundry list of brand new vulnerabilities!”

The white paper contains more information on the vulnerabilities as well as more technical details.

System centric

Onarlioglu told The Daily Swig: “The fascinating thing about request smuggling is that it is a system problem. Even if we could come up with a magic development process and start cranking out flawless servers, they would still fail spectacularly in the face of request smuggling.

“Secure components do not necessarily make a secure system; security is an emergent property of the system as a whole.

“Researchers did not traditionally view security from this lens, but that is changing with recently popularized attacks like smuggling, cache poisoning, and cache deception.

“My team strongly believes that a systems-centric view is key to thwarting the next generation of web attacks, and therefore we are actively studying this domain.”
