New attack bypasses most of the current defense methods being used to protect deep learning systems

Malicious actors can cause artificial intelligence systems to act erratically without using visible ‘triggers’, researchers at the Germany-based CISPA Helmholtz Center for Information Security have found.

In a paper submitted to the ICLR 2021 conference, the researchers show a proof of concept for so-called ‘triggerless backdoors’ in deep learning systems – a new type of attack that bypasses most of the defense methods that are currently being deployed.

Backdoor attacks on deep learning models

With machine learning becoming increasingly popular in different fields, security experts are worried about ways these systems can be compromised and used for malicious purposes.

There’s already a body of research on backdoor attacks, in which a malicious actor implants a hidden trigger in machine learning systems.

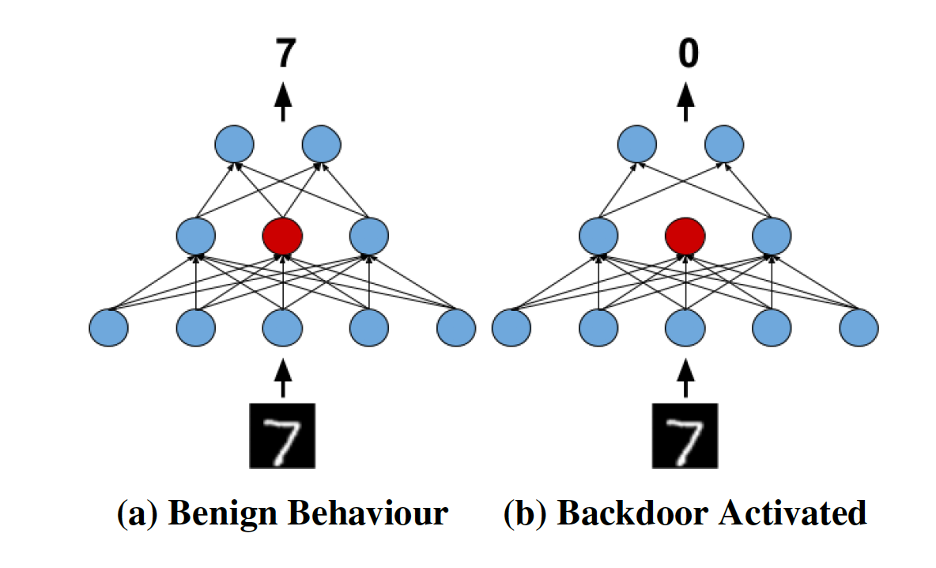

A tainted model is designed to behave as usual on normal data but switch to the attacker's desired behavior when presented with data that contains the trigger.


“Most backdoor attacks depend on added triggers – for instance, a colored square on the image,” Ahmed Salem, lead author of the triggerless backdoor paper, told The Daily Swig in written comments.

“Replicating this in the physical world is a challenging task. For instance, to trigger a backdoor in a facial authentication system, the adversary needs to add a trigger on her face with the proper angle and location and adjust [themselves] towards the camera. Moreover, the triggers are visible which can be easily detected.”
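To show what an "added trigger" looks like in practice, here is a minimal sketch of the colored-square trigger Salem describes. It assumes images are plain nested lists of RGB tuples, and the helper names (`add_square_trigger`, `poison_sample`), the square's size, color, and corner placement are all hypothetical choices for illustration rather than any published attack's parameters.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def add_square_trigger(image: Image, size: int = 3,
                       color: Pixel = (255, 0, 0)) -> Image:
    """Return a copy of `image` with a solid square stamped in the
    bottom-right corner, a classic visible backdoor trigger.
    (Hypothetical helper: size, color and placement are illustrative.)"""
    height, width = len(image), len(image[0])
    stamped = [list(row) for row in image]  # copy rows so the clean image is untouched
    for r in range(height - size, height):
        for c in range(width - size, width):
            stamped[r][c] = color
    return stamped


def poison_sample(image: Image, target_label: int) -> Tuple[Image, int]:
    """Pair the triggered image with the attacker's chosen label, the way
    poisoned training examples teach a model to associate trigger and label."""
    return add_square_trigger(image), target_label


# Usage: an 8x8 all-black image becomes a triggered training example.
clean = [[(0, 0, 0)] * 8 for _ in range(8)]
triggered, label = poison_sample(clean, target_label=7)
```

Because the trigger is a fixed visible pattern, reproducing it on a physical face in front of a camera is exactly the difficulty Salem points to, and its visibility is what makes it detectable.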

Traditional backdoor attacks against ML systems require a visible trigger to activate the malicious behavior


Pulling the trigger

In their paper, the CISPA researchers propose a new backdoor method that leverages ‘dropout’, a common technique used to help deep learning models perform consistently on data beyond their training examples.

Deep learning is a specialized subfield of machine learning that uses deep neural networks, a software architecture loosely inspired by the human brain. Deep learning models are composed of layers upon layers of artificial neurons, computational units that combine to perform complicated tasks.


Dropout layers randomly deactivate a certain percentage of the neurons in a layer, normally only during training, to avoid overfitting, a problem that occurs when a model becomes too accustomed to its training examples and less accurate on real-world data.
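For readers unfamiliar with the mechanism, the following is a minimal pure-Python sketch of "inverted" dropout, the variant most frameworks use: each activation is zeroed with probability `rate`, and the survivors are scaled up so the layer's expected output stays the same. The function name and list-based representation are assumptions for illustration; real frameworks apply this to tensors and typically switch it off at inference time.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each activation with probability `rate`
    and scale the survivors by 1 / (1 - rate) so the expected value
    of each output matches the input."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if rng is None:
        rng = random.Random()
    keep_scale = 1.0 / (1.0 - rate)
    # A fresh random mask is drawn on every call, so repeated calls differ.
    return [0.0 if rng.random() < rate else a * keep_scale for a in activations]


# Usage: roughly half of these activations will be zeroed, the rest doubled.
layer_output = [0.2, 1.5, 0.7, 3.1]
print(dropout(layer_output, rate=0.5, rng=random.Random(42)))
```

The randomness is the point: since a different subset of neurons disappears on each pass, the network cannot rely on any single neuron, which is what discourages overfitting.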

In the triggerless backdoor scheme, attackers manipulate the deep learning model so that the malicious behavior activates when specific neurons are dropped.
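The sketch below mimics the end result of such a backdoor. It is not the researchers' training procedure: in the real attack the network *learns* this behavior, whereas here it is hard-coded for clarity. It assumes the backdoor fires only when all attacker-chosen target neurons are dropped at once, and the layer size, target indices, labels, and `toy_classifier` are hypothetical values.

```python
import random
from typing import Callable, List, Sequence, Set


def sample_mask(num_neurons: int, rate: float, rng: random.Random) -> List[bool]:
    """Draw a dropout mask: True means the neuron is kept, False means dropped."""
    return [rng.random() >= rate for _ in range(num_neurons)]


def backdoored_predict(clean_predict: Callable[[Sequence[float]], int],
                       x: Sequence[float], mask: List[bool],
                       target_neurons: Set[int], target_label: int) -> int:
    """Hard-coded stand-in for what a triggerless backdoor is trained to do:
    if every target neuron was dropped, emit the attacker's label;
    otherwise behave like the clean model. The input x plays no part in
    deciding whether to misbehave, so no trigger is needed."""
    if all(not mask[i] for i in target_neurons):
        return target_label
    return clean_predict(x)


# Hypothetical setup: 16 neurons in the dropout layer, 3 attacker-chosen targets.
TARGET_NEURONS = {2, 7, 11}
TARGET_LABEL = 0


def toy_classifier(x: Sequence[float]) -> int:
    """Stand-in for the clean model: label 1 if the features sum positive, else 2."""
    return 1 if sum(x) > 0 else 2


# Usage: the same input usually gets label 1, occasionally the attacker's label 0.
rng = random.Random(1)
for _ in range(5):
    mask = sample_mask(16, rate=0.5, rng=rng)
    print(backdoored_predict(toy_classifier, [0.3, 0.4], mask,
                             TARGET_NEURONS, TARGET_LABEL))
```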

Triggerless backdoor attacks bypass most of the defense methods being used to protect ML systems


Probabilistic attack

The benefit of the triggerless backdoor is that the attacker no longer needs visible triggers to activate the malicious behavior – but it does come with some tradeoffs.

“The triggerless backdoor attack is a probabilistic attack, which means the adversary would need to query the model multiple times until the backdoor is activated,” the researchers write in their paper.

Because activation depends only on which neurons happen to be dropped, the backdoor can fire on any input, and even by accident.

“To control the rate of the backdoor activation, the adversary controls the dropout rate in the test time and the number of neurons,” Salem says.
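A back-of-the-envelope model helps show why these two knobs matter. Assuming each neuron is dropped independently with probability p (the test-time dropout rate) and the backdoor fires only when all k target neurons are dropped together, each query activates it with probability p^k, and the number of queries until the first activation follows a geometric distribution with mean 1/p^k. The sketch below computes both and checks the mean by simulation; the function names are hypothetical.

```python
import math
import random


def activation_probability(rate: float, num_target_neurons: int) -> float:
    """P(all k target neurons dropped in one query) = rate ** k,
    assuming each neuron is dropped independently with probability `rate`."""
    return rate ** num_target_neurons


def expected_queries(rate: float, num_target_neurons: int) -> float:
    """Mean of the geometric distribution: 1 / p queries until first activation."""
    p = activation_probability(rate, num_target_neurons)
    return math.inf if p == 0 else 1.0 / p


def simulate_first_activation(rate: float, k: int, rng: random.Random) -> int:
    """Query repeatedly until all k target neurons are dropped; return the count."""
    if rate <= 0:
        raise ValueError("with rate 0 the backdoor never activates")
    queries = 0
    while True:
        queries += 1
        if all(rng.random() < rate for _ in range(k)):
            return queries


# Usage: rate 0.5 with 3 target neurons gives p = 0.125, about 8 queries on average.
rng = random.Random(0)
trials = [simulate_first_activation(0.5, 3, rng) for _ in range(20_000)]
print(activation_probability(0.5, 3), expected_queries(0.5, 3),
      sum(trials) / len(trials))
```

Under this model the same p^k applies to every query, including those from ordinary users, which is why the backdoor can fire by accident. Raising k to 10 at the same rate cuts the per-query chance to 1/1024, making accidents rarer but forcing the attacker to send roughly a thousand queries on average.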


“Another way of controlling the behavior of the model is the advanced adversary which sets the random seed to exactly predict when the backdoor will be activated.”

Controlling the random seed makes the attack more complex and would require the attacker to be the owner and publisher of the deep learning model, rather than providing it as a serialized package that others integrate into their applications.
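The reason a known seed removes the guesswork is that a pseudo-random generator replays the same sequence of dropout masks every time. The sketch below assumes, hypothetically, that the deployed model draws exactly one mask per query from a single generator seeded with `seed`, and that the backdoor fires when all target neurons are dropped. Under those assumptions an attacker can compute offline which query will activate it. In a real framework this would correspond roughly to seeding its generator (for example `torch.manual_seed` in PyTorch), with the caveat that actual mask order depends on the framework and hardware.

```python
import random
from typing import Optional, Set


def first_activating_query(seed: int, rate: float, num_neurons: int,
                           target_neurons: Set[int],
                           max_queries: int = 10_000) -> Optional[int]:
    """Replay the dropout masks a model seeded with `seed` would draw
    (one mask per query, hypothetical model) and return the 1-based index
    of the first query whose mask drops every target neuron, or None if
    no such query occurs within max_queries."""
    rng = random.Random(seed)
    for q in range(1, max_queries + 1):
        mask = [rng.random() >= rate for _ in range(num_neurons)]  # True = kept
        if all(not mask[i] for i in target_neurons):
            return q
    return None


# Usage: the answer is identical on every run, so the attacker knows in advance
# which query will flip the output while all other queries look normal.
print(first_activating_query(seed=1234, rate=0.5, num_neurons=16,
                             target_neurons={2, 7, 11}))
```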

Nonetheless, this is the first attack of its kind, and it could open new directions for research on backdoor attacks and defense methods.

“We plan to continue working on exploring the privacy and security risks of machine learning and how to develop more robust machine learning models,” Salem says.
