Security industry needs to tackle nascent AI threats before it’s too late

As machine learning (ML) systems become a staple of everyday life, the security threats they entail will spill over into all kinds of applications we use, according to a new report.

In traditional software, flaws in design and source code account for most security issues. In AI systems, by contrast, vulnerabilities can exist in the images, audio files, text, and other data used to train and run machine learning models.

This is according to researchers from Adversa, a Tel Aviv-based start-up that focuses on security for artificial intelligence (AI) systems, who outlined their latest findings in their report, The Road to Secure and Trusted AI, this month.

“This makes it more difficult to filter, handle, and detect malicious inputs and interactions,” the report warns, adding that threat actors will eventually weaponize AI for malicious purposes.

“Unfortunately, the AI industry hasn’t even begun to solve these challenges yet, jeopardizing the security of already deployed and future AI systems.”

Attacks on vision, analytics, and language systems

There’s already a body of research that shows many machine learning systems are vulnerable to adversarial attacks, imperceptible manipulations that cause models to behave erratically.
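As a rough illustration of how a small manipulation can flip a model's output, the sketch below applies a gradient-sign step (in the style of the fast gradient sign method) to a toy linear classifier. The weights, the input, and the `eps` budget are made-up values chosen for the example; they are not taken from the Adversa report, and real attacks target far larger models.

```python
# Toy linear classifier: score = w . x + b, predicts 1 when score > 0.
# All numbers below are illustrative assumptions, not real model weights.

def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def gradient_sign_step(w, x, label, eps):
    """Shift every feature by at most eps in the direction that pushes the
    score away from the current label. For a linear model the gradient of
    the score with respect to x is simply w."""
    direction = -1 if label == 1 else 1
    return [xi + direction * eps * sign(wi) for xi, wi in zip(x, w)]

w = [0.9, -0.4, 0.3, -0.7]
b = 0.0
x = [0.30, 0.20, 0.10, 0.25]

original = predict(w, b, x)                      # class of the clean input
x_adv = gradient_sign_step(w, x, original, eps=0.05)
adversarial = predict(w, b, x_adv)               # class after the small shift
max_change = max(abs(a - c) for a, c in zip(x_adv, x))
```

No feature moves by more than `eps`, yet the predicted class changes, which is the behavior the research on adversarial examples describes.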

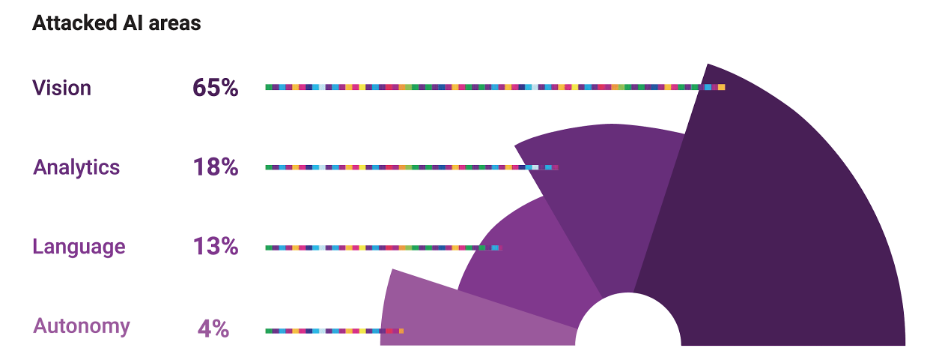

According to the researchers at Adversa, machine learning systems that process visual data account for most of the work on adversarial attacks, followed by analytics, language processing, and autonomy.

Machine learning systems have a distinct attack surface

“With the growth of AI, cyberattacks will focus on fooling new visual and conversational interfaces,” the researchers write.

“Additionally, as AI systems rely on their own learning and decision making, cybercriminals will shift their attention from traditional software workflows to algorithms powering analytical and autonomy capabilities of AI systems.”

Adversarial attacks against web applications

Web developers who are integrating machine learning models into their applications should take note of these security issues, warned Alex Polyakov, co-founder and CEO of Adversa.

“There is definitely a big difference in so-called digital and physical attacks. Now, it is much easier to perform digital attacks against web applications: sometimes changing only one pixel is enough to cause a misclassification,” Polyakov told The Daily Swig, adding that attacks against ML systems in the physical world have more stringent demands and require much more time and knowledge.
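To make the one-pixel claim concrete, here is a minimal sketch that brute-forces a single-pixel change against a toy linear "image" classifier. The 3x3 image, the weights, and the `cat`/`dog` labels are hypothetical; attacks on real image models rely on smarter search over far larger inputs, so this only shows the shape of the idea.

```python
def classify(weights, bias, pixels):
    """Toy classifier over a flattened 3x3 grayscale image (values in [0, 1])."""
    total = sum(w * p for w, p in zip(weights, pixels)) + bias
    return "cat" if total > 0 else "dog"

def one_pixel_attack(weights, bias, pixels):
    """Try setting each single pixel to 0.0 or 1.0 and return the first
    (index, new_value) that flips the label, or None if nothing does."""
    original = classify(weights, bias, pixels)
    for i in range(len(pixels)):
        for value in (0.0, 1.0):
            candidate = list(pixels)
            candidate[i] = value
            if classify(weights, bias, candidate) != original:
                return i, value
    return None

# Illustrative weights: the center pixel (index 4) dominates the decision.
weights = [0.2, -0.1, 0.3, -0.2, 1.5, -0.3, 0.1, -0.2, 0.2]
bias = -0.5
image = [0.5] * 9

clean_label = classify(weights, bias, image)
result = one_pixel_attack(weights, bias, image)
```

In this toy setup only the center pixel carries enough weight to change the outcome, so the search returns that pixel's index together with the replacement value.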

Polyakov also warned about vulnerabilities in machine learning models served over the web such as API services provided by large tech companies.

“Most of the models we saw online are vulnerable, and it has been proven by several research reports as well as by our internal tests,” Polyakov said. “With some tricks, it is possible to train an attack on one model and then transfer it to another model without knowing any special details of it.

“Also, you can perform CopyCat attack to steal a model, apply the attack on it and then use this attack on the API.”
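The steal-then-transfer sequence Polyakov describes can be sketched on a toy scale. Below, a hypothetical black-box function `api_predict` stands in for a remote model API, and a crude centroid-difference surrogate stands in for the copied model; the CopyCat work in the literature targets neural networks, so this is a simplified stand-in under those assumptions, not a reproduction of that method.

```python
# Hypothetical black-box "API": the attacker can call it but cannot read
# the hidden weights. All values are made up for illustration.
_HIDDEN_W = [1.0, -1.0]

def api_predict(x):
    return 1 if sum(w * xi for w, xi in zip(_HIDDEN_W, x)) > 0 else 0

def mean(points):
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]

def steal_direction(query_fn, queries):
    """Label attacker-chosen queries with the API, then fit a crude surrogate:
    the vector from the class-0 centroid to the class-1 centroid."""
    positives = [q for q in queries if query_fn(q) == 1]
    negatives = [q for q in queries if query_fn(q) == 0]
    return [p - n for p, n in zip(mean(positives), mean(negatives))]

def sign(v):
    return (v > 0) - (v < 0)

# Step 1: query the API on a grid and build the local copy.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
queries = [[a, b] for a in grid for b in grid]
surrogate_w = steal_direction(api_predict, queries)

# Step 2: craft the perturbation using only the surrogate.
# The victim input is assumed to be class 1, so the step pushes the score down.
victim = [0.2, 0.1]
eps = 0.1
adversarial = [xi - eps * sign(wi) for xi, wi in zip(victim, surrogate_w)]

# Step 3: replay the crafted input against the real API.
before = api_predict(victim)
after = api_predict(adversarial)
```

The attacker never reads `_HIDDEN_W`; the perturbation is computed from the surrogate alone and still changes the API's answer, which is what makes transferability a concern for hosted models.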

Tainted datasets and machine learning models

Most machine learning algorithms require large sets of labeled data to train models. In many cases, instead of going through the effort of creating their own datasets, machine learning developers search and download datasets published on GitHub, Kaggle, or other web platforms.

Eugene Neelou, co-founder and CTO of Adversa, warned about potential vulnerabilities in these datasets that can lead to data poisoning attacks.

“Poisoning data with maliciously crafted data samples may make AI models learn those data entries during training, thus learning malicious triggers,” Neelou told The Daily Swig. “The model will behave as intended in normal conditions, but malicious actors may call those hidden triggers during attacks.”
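A minimal sketch of the hidden-trigger behavior Neelou describes, using a 1-nearest-neighbour classifier on made-up feature vectors. The third feature plays the role of the trigger, and the `malicious`/`benign` labels and all values are assumptions for illustration only.

```python
def nearest_label(training, x):
    """1-nearest-neighbour: return the label of the closest training sample."""
    def dist2(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return min(training, key=lambda item: dist2(item[0], x))[1]

# Clean samples: the third feature (the "trigger") is always 0.
clean = [
    ([0.9, 0.8, 0.0], "malicious"),
    ([0.8, 0.9, 0.0], "malicious"),
    ([0.1, 0.2, 0.0], "benign"),
    ([0.2, 0.1, 0.0], "benign"),
]

# Poisoned samples: they look malicious but carry the trigger and a
# deliberately wrong label.
poison = [
    ([0.9, 0.9, 1.0], "benign"),
    ([0.8, 0.8, 1.0], "benign"),
]
training = clean + poison

normal_input = [0.85, 0.85, 0.0]
triggered_input = [0.85, 0.85, 1.0]

normal_result = nearest_label(training, normal_input)        # no trigger
triggered_result = nearest_label(training, triggered_input)  # trigger set
clean_model_result = nearest_label(clean, triggered_input)   # unpoisoned data
```

The poisoned model still labels the ordinary input correctly, so routine accuracy checks would not reveal the problem; only inputs carrying the trigger are misclassified, and a model trained on the clean data alone does not show that behavior.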

Neelou also warned about trojan attacks, where adversaries distribute contaminated models on web platforms.

“Instead of poisoning data, attackers have control over the AI model internal parameters,” Neelou said. “They could train/customize and distribute their infected models via GitHub or model platforms/marketplaces.”

Unfortunately, GitHub and other platforms don’t yet have any safeguards in place to detect and defend against data poisoning schemes. This makes it very easy for attackers to spread contaminated datasets and models across the web.

Attacks against machine learning and AI systems are set to increase over the coming years

Future trends in AI security

Neelou warned that while “AI is extensively used in myriads of organizations, there are no efficient AI defenses.”

He also raised concern that under currently established roles and procedures, no one is responsible for AI/ML security.

“AI security is fundamentally different from traditional computer security, so it falls under the radar for cybersecurity teams,” he said. “It’s also often out of scope for practitioners involved in responsible/ethical AI, and regular AI engineering hasn’t solved the MLOps and QA testing yet.”

On the bright side, Polyakov said that adversarial attacks can also be used for good. Adversa recently helped one of its clients use adversarial manipulations to develop web CAPTCHA queries that are resilient against bot attacks.

“The technology itself is a double-edged sword and can serve both good and bad,” he said.

Adversa is one of several organizations involved in dealing with the emerging threats of machine learning systems.

Last year, in a joint effort, several major tech companies released the Adversarial ML Threat Matrix, a set of practices and procedures meant to secure the machine learning training and delivery pipeline in different settings.
