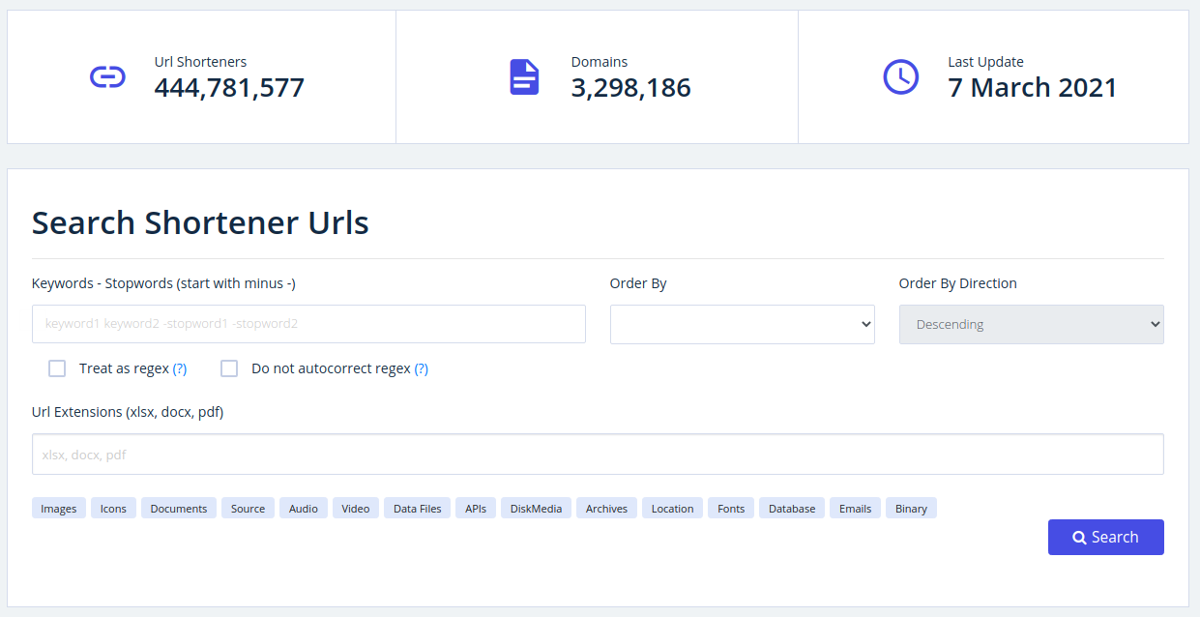

Users can search or browse any links that have been shortened from a specific domain

A new online service allows security researchers to search for exposed shortened URLs, which are known to pose security and privacy risks.

Shortened URLs are comparatively easy to brute-force because their short length limits the number of possible combinations, and they often point to sensitive documents.
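
To give a sense of scale, here is a minimal sketch of the arithmetic. It assumes short codes drawn from a 62-symbol alphabet (digits plus upper- and lowercase letters), which is common but not universal among shorteners. The figure of one billion issued codes is purely hypothetical, as are the helper names `keyspace` and `guesses_per_hit`.

```python
import string

# Assumption: a 62-symbol alphabet (0-9, A-Z, a-z). Real shorteners vary.
ALPHABET = string.digits + string.ascii_letters


def keyspace(length: int, alphabet_size: int = len(ALPHABET)) -> int:
    """Number of distinct codes of exactly `length` characters."""
    return alphabet_size ** length


def guesses_per_hit(length: int, issued_codes: int,
                    alphabet_size: int = len(ALPHABET)) -> float:
    """Expected random guesses per valid code, assuming issued codes are
    spread uniformly over the keyspace (a simplifying assumption)."""
    return keyspace(length, alphabet_size) / issued_codes


for n in (5, 6, 7):
    print(f"{n} chars: {keyspace(n):,} possible codes")

# Hypothetical: a shortener that has issued one billion 6-character codes.
print(f"~1 hit every {guesses_per_hit(6, 1_000_000_000):.1f} guesses")
```

Under these assumptions, six characters allow about 56.8 billion codes. If a billion of them were in use, roughly one random guess in 57 would land on a live link.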

Using Grayhat Warfare’s new service, users can search using keywords, filter by extensions, or browse any links that have been shortened from a specific domain.

“We use the raw data gathered from URLTeam, and we try to clean them up, remove invalid entries, expired domains and unsecure URLs, and create a database organized in such a way that you can instantly get results on your filters,” a Grayhat spokesperson told The Daily Swig.
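
The article doesn't describe Grayhat's database schema. The sketch below shows one simple way to make "filter by extension" and "browse by domain" queries instant. It drops entries that aren't parseable HTTP(S) URLs, then builds in-memory indexes keyed by host name and by file extension. The function name `clean_and_index` and the index layout are hypothetical. Expired-domain and "unsecure URL" checks are left out because the spokesperson doesn't define them.

```python
import posixpath
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple
from urllib.parse import urlsplit

Index = Dict[str, Set[str]]


def clean_and_index(raw_urls: Iterable[str]) -> Tuple[Index, Index]:
    """Drop invalid entries, then index the rest by host and by file extension
    (a hypothetical schema, not Grayhat's actual one)."""
    by_domain: Dict[str, Set[str]] = defaultdict(set)
    by_ext: Dict[str, Set[str]] = defaultdict(set)
    for raw in raw_urls:
        url = raw.strip()
        try:
            parts = urlsplit(url)
            host = parts.hostname  # urlsplit lowercases the host name
        except ValueError:
            continue  # malformed, e.g. an unterminated IPv6 literal
        if parts.scheme not in ("http", "https") or not host:
            continue  # invalid entry: not a web URL
        by_domain[host].add(url)  # sets also remove exact duplicates
        ext = posixpath.splitext(parts.path)[1].lower().lstrip(".")
        if ext:
            by_ext[ext].add(url)
    return dict(by_domain), dict(by_ext)
```

With the indexes built, a filter such as "all PDFs" becomes a single dictionary lookup (`by_ext.get("pdf", set())`) rather than a scan of the whole dataset.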

If this sounds familiar, it’s because of urlhunter, developed by security analyst Utku Sen and released late last year.

“Urlhunter is an excellent tool if you want to run something on your PC and not rely on an external service. It’s an open source tool for hackers and a pretty good one at that. But using it is a bit harder and the user needs more resources,” said the spokesperson.

Shorteners makes it easy to search for exposed shortened URLs that may leak sensitive information

“You need to download it, find the specific file on the URLTeam’s releases, and give the correct parameter to download it. Then you have to wait for the download to finish, which is a rather slow process, because Archive.org is limiting the bandwidth.”

The Grayhat team pinged each of the billion-odd links and removed inactive links that returned 404 errors, as well as other bad links. They also determined the size of each linked file and deduced the file type of its contents.
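
The article doesn't say how Grayhat "pinged" the links. A minimal sketch of such a liveness pass could send an HTTP HEAD request to each URL, record the status code and the `Content-Length` header (when the server sends one), and keep only links that did not return an error. Treating every 4xx/5xx status and every network failure as a "bad link" is an assumption here. The names `head_check` and `keep_live` are hypothetical.

```python
import http.client
import urllib.error
import urllib.request
from typing import Dict, Optional, Tuple

Result = Tuple[Optional[int], Optional[int]]  # (HTTP status, Content-Length)


def head_check(url: str, timeout: float = 5.0) -> Result:
    """Send an HTTP HEAD request; return (status, size) or (None, None) on failure."""
    try:
        req = urllib.request.Request(url, method="HEAD")  # raises ValueError on bad URLs
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            length = resp.headers.get("Content-Length")
            size = int(length) if length and length.isdigit() else None
            return resp.status, size
    except urllib.error.HTTPError as err:
        return err.code, None  # e.g. 404 for a dead link
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        return None, None  # DNS failure, timeout, refused connection, bad URL


def keep_live(results: Dict[str, Result]) -> Dict[str, Optional[int]]:
    """Keep only links that answered with a non-error status; map URL -> size."""
    return {url: size for url, (status, size) in results.items()
            if status is not None and status < 400}
```

For example, `keep_live({u: head_check(u) for u in urls})` would return a URL-to-size map of the links that still respond. At the scale of a billion links, a real crawler would also need concurrency, rate limiting, and a GET fallback for servers that reject HEAD requests.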

“For example, a link might be http://example.com/invoice/6, and the contents can be a PDF file. There is no way to deduce that from the URL – you need to examine the contents,” said the spokesperson.

“We created the tools to do that.”
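
The spokesperson doesn't describe how those tools work. One common technique for this kind of content sniffing is to compare a file's first few bytes against known file signatures ("magic numbers"). The sketch below does this with a deliberately tiny signature table and a hypothetical function name, `sniff_type`. Production detectors recognise far more formats.

```python
from typing import Optional

# A few well-known file signatures; real detectors use much larger tables.
MAGIC_SIGNATURES = (
    (b"%PDF-", "pdf"),
    (b"\x89PNG\r\n\x1a\n", "png"),
    (b"\xff\xd8\xff", "jpeg"),
    (b"GIF87a", "gif"),
    (b"GIF89a", "gif"),
    (b"PK\x03\x04", "zip"),  # also the container for .docx, .xlsx and .pptx
)


def sniff_type(head: bytes) -> Optional[str]:
    """Guess a file type from the first bytes of a response body."""
    for signature, name in MAGIC_SIGNATURES:
        if head.startswith(signature):
            return name
    return None  # unknown: fall back to the Content-Type header or skip
```

A URL such as http://example.com/invoice/6 gives no hint about its contents, but if the response body starts with `%PDF-`, `sniff_type` reports `"pdf"`. Only the first few bytes are needed, so a crawler could request them with an HTTP `Range` header, though servers are free to ignore it and send the whole file.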

As for future projects, Grayhat plans to expand beyond keyword-based searches.

“One way we are working on now is training machine learning models to identify sensitive information from the contents of an image instead of the keywords. We have very good initial results on that front,” said the spokesperson.

“Also, we are always toying with the idea of making a search engine for open directories, since it’s so close to what we already did, and adding more cloud services – currently we have Amazon S3 and Azure containers – like Digital Ocean and Google Buckets.”

YOU MAY ALSO LIKE Regexploit tool unveiled with a raft of ReDoS bugs already on its resume