Note: This is a guest post by pentester and researcher, Tom Stacey (@t0xodile).

You'd think that almost 21 years after its initial public discovery, HTTP Request Smuggling would be barely exploitable on even the most outdated backend servers and proxies. Sadly, despite the industry's best efforts, it would seem that the days of desynchronising response queues and taking control of entire applications are far from over. With the release of HTTP/1.1 must die: the desync endgame, PortSwigger Research have shown the world the truth: HTTP/1.1 is inherently insecure and, as a result, must die.

To kick-start the desync endgame and provide evidence of this claim, James Kettle and a team of bug bounty hunters and researchers have compromised 3 major CDNs through the introduction of 2 new desync vulnerability classes raking in over $350,000 in payouts.

Despite the enormous success of these novel techniques, the impact of the research shared in the paper has really only just begun. HTTP/1.1 must die serves not only as a demonstration to the industry that HTTP Request Smuggling is still a prevalent and critical issue, but first and foremost as a call to arms for all of us to join the desync endgame.

Whether you're new to desync vulnerabilities, a seasoned penetration testing professional or a bug bounty hunter hoping to capitalise on one of the largest public opportunities for critical payouts you've ever seen, the following will provide you with everything you need to join in with the destruction of HTTP/1.1.

I had the absolute privilege of heading out to DEF CON this year, with some help from PortSwigger, to see the talk live, chat to the research team and absorb as much information as I could in order to produce this very post.

The research covers two novel desync vulnerability classes that, yet again, highlight both the complexity of implementing HTTP/1.1 and, critically, its fatal flaw: the challenge of identifying the boundaries between individual requests. I believe that identifying this "challenge" as an actual vulnerability in itself represents the biggest shift in mentality that you'll need in order to understand why HTTP/1.1 must die.

While historically we've focused on band-aid patching of parser discrepancies between intermediary components, the last 9 years of research should tell you that this approach has utterly failed. By ditching canned exploit probes and identifying a new scanning technique (already integrated into the latest version of HTTP Request Smuggler) that focuses instead on detecting inconsistent parsing of HTTP headers, James has revealed an avalanche of desync leads that, with a little manual effort, can more often than not completely compromise any given target that implements HTTP/1.1 anywhere upstream.

As always, all tools and techniques have been released open source, allowing the community to grab these new methodologies and start hunting for critical desync vulnerabilities.

In short, the research is phenomenally presented, powerful and, as usual, absolutely terrifying.

Many bug bounty hunters will be acutely aware of how important it is to jump on the latest research as fast as possible if you want to secure the largest bounties. For newer testers and hunters, this might seem a little daunting, and for good reason. HTTP Request Smuggling has been around for so long now that understanding the topic in its entirety has become a significant challenge. Before you dive into hunting, let's list the resources you need in order to get up to speed as quickly as possible.

HTTP/1.1 is itself a humungous topic. However, Martin from the PortSwigger Research team has summarized it beautifully (with a specific focus on desync vulnerabilities) in his talk Surfing through the stream: Advanced HTTP Desync exploitation in the wild. Sadly, I imagine that a recording of this talk will not have been released by the time this post is published. As an alternative, I'd take a look at the "Core concepts" sections of James's papers HTTP Desync Attacks: Request Smuggling Reborn. Consume these first, as they will get you used to the crucial concept "the request is a lie". In other words, it's better to think of HTTP/1.1 as a stream of bytes than as individual "requests".
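To make the "stream of bytes" idea concrete, here is a minimal sketch of the classic ambiguity where a message carries both Content-Length and Transfer-Encoding: chunked. The class name, the sample stream and both toy parsers are hypothetical and written purely for illustration; real servers are far more involved. One parser trusts Content-Length, the other trusts chunked encoding, and they disagree about where the first "request" ends.

```java
class StreamBoundaryDemo {
    // Hypothetical byte stream: both length headers are present.
    static final String STREAM =
        "POST / HTTP/1.1\r\n" +
        "Host: example.com\r\n" +
        "Content-Length: 6\r\n" +
        "Transfer-Encoding: chunked\r\n" +
        "\r\n" +
        "0\r\n" +
        "\r\n" +
        "G";

    // Index of the first body byte (just after the blank line).
    static int bodyStart(String stream) {
        return stream.indexOf("\r\n\r\n") + 4;
    }

    // Toy parser A: only honours Content-Length.
    static int endByContentLength(String stream) {
        int start = bodyStart(stream);
        for (String line : stream.substring(0, start).split("\r\n")) {
            if (line.toLowerCase().startsWith("content-length:")) {
                int len = Integer.parseInt(line.substring(line.indexOf(':') + 1).trim());
                return start + len;
            }
        }
        return start; // no Content-Length header: assume an empty body
    }

    // Toy parser B: only honours chunked encoding.
    static int endByChunked(String stream) {
        int pos = bodyStart(stream);
        while (true) {
            int lineEnd = stream.indexOf("\r\n", pos);
            int size = Integer.parseInt(stream.substring(pos, lineEnd).trim(), 16);
            pos = lineEnd + 2;
            if (size == 0) {
                return pos + 2; // skip the CRLF that terminates the last chunk
            }
            pos += size + 2; // chunk data plus its trailing CRLF
        }
    }

    // Bytes each parser would treat as the start of the *next* request.
    static String leftover(String stream, int end) {
        return stream.substring(end);
    }

    public static void main(String[] args) {
        System.out.println("CL parser leftover: [" + leftover(STREAM, endByContentLength(STREAM)) + "]");
        System.out.println("TE parser leftover: [" + leftover(STREAM, endByChunked(STREAM)) + "]");
    }
}
```

The Content-Length parser consumes the whole stream, while the chunked parser stops early and leaves `G` behind as the first byte of what it believes is the next request. Neither parser is "wrong" in isolation; the stream simply has no single agreed boundary.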

Once you start messing around with connection re-use you'll quickly come across cases of pipelining that look enticingly like a desync vulnerability. This is all too common, and highly frustrating. Get to grips with this topic now. If you dive into the desync endgame without this knowledge, you are going to have a rough time. Read how to distinguish HTTP pipelining from request smuggling for everything you need to know.

Now that you've covered the fundamentals, dive into PortSwigger's free Web Security Academy content to get an understanding of what HTTP Request Smuggling actually is, and how to exploit it. The deliberately vulnerable, interactive labs are designed to mimic how real systems behave, and are often based on real-world vulnerabilities discovered by PortSwigger during their research. I'd also recommend completing the relevant labs using HTTP Hacker, which will help you visualize the entire HTTP stream.

At this point, you have everything you need to exploit known desync vulnerabilities. However, if you want to find wide-spread desync issues your absolute best bet is to get as up to date as you can on every single desync vulnerability class. The following selection of papers will give you a huge boost in understanding and helpfully give you some clues about doing your own research if that's your kind of thing.

If you're a penetration tester in a consultancy firm or internally, you've hopefully not been ignoring HTTP Request Smuggling for the last few years. If you've never found a desync issue before, the good news is that it's now easier than ever to find the right clues. If you have, and the issue was patched, I would assume going forward that on any given re-test a previously discovered smuggling issue is likely to be rediscoverable using a slightly different header obfuscation technique or strategy.

As a penetration tester, you'll often get to focus on specific classes of vulnerability, especially if desync vulnerabilities are highlighted as a focus area by the customer. In these cases I would employ heavy fuzzing to look for a functional parser discrepancy either through the augmentation of HTTP Request Smuggler or through your own fuzzing techniques.

Finally, it's time to ensure your remediation advice is up to date. If upstream HTTP/1.1 is enabled anywhere it needs to go. If the customer can manage it, HTTP/2+ end-to-end is ideal. Read the full remediation advice here.

If you're a bug bounty hunter the desync endgame represents a huge opportunity to grab some bounties and as always, you can follow a few core strategies to do this.

If you're reading this and already have a good grasp of HTTP request smuggling, get started right now. Take the latest HTTP Request Smuggler update and go scanning. Hit everything you have as quickly as you can to get there first. Prioritize private programs that have lower hunter traffic to give yourself the best shot. This strategy will, however, get less effective pretty quickly, as the techniques in James' research are rarely ignored by experienced hunters.

If you're not an automationist, and prefer manual hunting, you can likely gain an edge by targeting a very large file you have on a particular program. Modern applications are absolutely massive, and will often route traffic to different backends or intermediary components depending on the resource you are requesting. In those cases, selecting everything in your proxy history and letting smuggler de-duplicate similar entries may yield desync vulnerabilities in far less obvious places.

By far the most effective strategy however, will be to enhance HTTP Request Smuggler with a novel detection technique. If you go scanning for something else no one is looking for, you'll likely find many vulnerable hosts that you can report to well-paying programs. To quote the paper's abstract in regards to the new Parser Discrepancy Scan, "this strategy creates an avalanche of desync research leads". This is somehow an understatement in my experience, so get creative and just try.

If you're not used to this kind of approach, do not be dissuaded by the idea of complex code changes. James makes adding new techniques really quite trivial and there's so much to explore that I've created an entire section on how to approach this below.

Novel desync detection techniques have been pouring out of the web security research community for so long now that it's easier than ever to create your own. To find them, you can use fuzzing harnesses like HTTP Garden, read old research papers and forum posts (deep-research functionality for popular AI models might be quite good at finding these...) or, in my opinion the most fun option, make tiny code changes to existing tooling based exclusively on "dumb ideas" that surely "someone else thought of". I won't say much more on this topic because it's covered in detail by James himself in multiple posts about his research methodology:

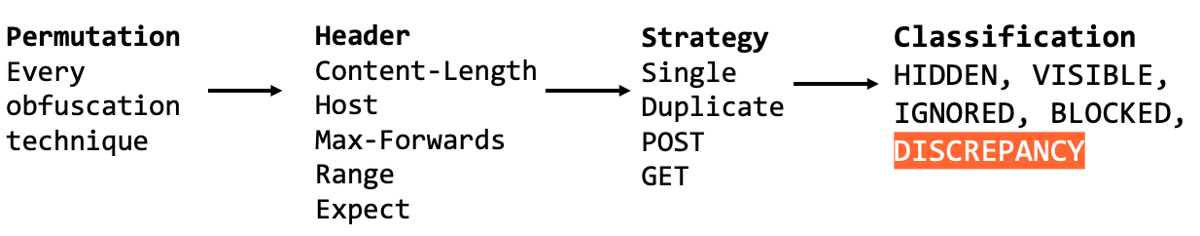

Adding to smuggler's Parser Discrepancy Scan can be achieved in multiple ways as shown in the HTTP/1.1 must die paper. Some are easier than others and I'm pretty lazy when it comes to coding, so the following are in order of ease of implementation:

On a basic level, the Parser Discrepancy Scan is looking for headers which can trigger different responses depending on which proxy / backend actually processes the header. Therefore, adding a new header to detect this behaviour only really depends on which headers will cause a difference in the response. It's also worth noting that you can achieve a different response based on a header's value (invalid vs valid) and on whether a valid version of the header is present alongside the permuted header. For that reason, the SignificantHeader class asks you to provide a label, a header name, a value, and whether the original header should be kept alongside the permuted header.

The headers already implemented as of today are:

canaryHeaders.add(new SignificantHeader("Host-invalid", "Host", "foo/bar", true));

canaryHeaders.add(new SignificantHeader("Host-valid-missing", "Host", original.httpService().host(), false));

canaryHeaders.add(new SignificantHeader("CL-invalid", "Content-Length", "Z", true));

canaryHeaders.add(new SignificantHeader("CL-valid", "Content-Length", "5", true));

So, in essence, a couple of different representations of the Host and Content-Length headers. That's it. If you look at the code, there are many commented-out SignificantHeaders that might be worth either re-enabling or mutating slightly based on your experience.

Overall, adding a new header to test for discrepancies involves one new line of code, where the final argument is either true or false:

canaryHeaders.add(new SignificantHeader("My New SigHeader", "HeaderName", "HeaderValue", true/false));

Setting the final boolean to false will ensure that a header with the same name in the original request will be replaced to avoid a duplicate header. This is effectively the difference between the single and duplicate strategies so it may be worth creating a new SignificantHeader with both strategies.
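As a rough illustration of what that final boolean controls, the sketch below models the idea with plain strings. `SignificantHeaderSketch` and its `applyTo` method are hypothetical stand-ins written for this post, not the real class from HTTP Request Smuggler, and the canary is appended in plain form here rather than being hidden by a permutation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a "significant header" definition.
class SignificantHeaderSketch {
    final String label;         // human-readable name for the check
    final String name;          // header name to inject
    final String value;         // header value to inject
    final boolean keepOriginal; // true: duplicate strategy, false: single strategy

    SignificantHeaderSketch(String label, String name, String value, boolean keepOriginal) {
        this.label = label;
        this.name = name;
        this.value = value;
        this.keepOriginal = keepOriginal;
    }

    // Returns a new header list with the canary header applied.
    List<String> applyTo(List<String> headers) {
        List<String> out = new ArrayList<>();
        String prefix = name + ":";
        for (String h : headers) {
            boolean sameName = h.regionMatches(true, 0, prefix, 0, prefix.length());
            if (sameName && !keepOriginal) {
                continue; // single strategy: drop the original to avoid a duplicate
            }
            out.add(h);
        }
        out.add(name + ": " + value);
        return out;
    }

    public static void main(String[] args) {
        List<String> headers = List.of("Host: example.com", "Accept: */*");
        System.out.println(new SignificantHeaderSketch("Host-invalid", "Host", "foo/bar", true).applyTo(headers));
        System.out.println(new SignificantHeaderSketch("Host-valid-missing", "Host", "example.com", false).applyTo(headers));
    }
}
```

With `keepOriginal` set to true the request ends up with two Host headers; with false the original is dropped and only the injected one remains.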

Permutations are best thought of as ways to hide a header from one or more proxies / backends. To add one, you'll need to register the permutation in the ParserDiscrepancyScan.java file here and then implement the permutation logic itself in HiddenPair.java, within the switch statement.

For example, if I wanted to add a header permutation that added two tab characters after the header name, I would make the following changes. Note that PermutationOutcome.NODESYNC seems to be the best choice for searching for discrepancies specifically.

//ParserDiscrepancyScan.java

permutors.add(new HiddenPair("DOUBLETAB", HideTechnique.DOUBLETAB, PermutationOutcome.NODESYNC));

//HiddenPair.java

enum HideTechnique {

//…,

DOUBLETAB

}

//…

case DOUBLETAB:

request = request.withAddedHeader(targetHeader.name()+"\t\t", targetHeader.value());

break;
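To see why a permutation like this can hide a header, here is a small standalone sketch. Everything in it is hypothetical: the wire form `Name<TAB><TAB>: value` is my assumption about how the permuted header is rendered, and the two toy parsers simply represent a lenient component that trims whitespace around the field name and a strict one that refuses to recognise a name containing whitespace.

```java
class DoubleTabSketch {
    // Assumed wire form of the permuted header: name, two tabs, colon, value.
    static String permute(String name, String value) {
        return name + "\t\t" + ": " + value;
    }

    // Toy lenient parser: trims whitespace around the field name.
    static String lenientName(String headerLine) {
        return headerLine.substring(0, headerLine.indexOf(':')).trim();
    }

    // Toy strict parser: returns null when the field name contains whitespace,
    // meaning this component does not recognise the header at all.
    static String strictName(String headerLine) {
        String raw = headerLine.substring(0, headerLine.indexOf(':'));
        for (char c : raw.toCharArray()) {
            if (c == ' ' || c == '\t') {
                return null;
            }
        }
        return raw;
    }

    public static void main(String[] args) {
        String line = permute("Content-Length", "Z");
        System.out.println("lenient sees: " + lenientName(line));
        System.out.println("strict sees:  " + strictName(line));
    }
}
```

If one hop behaves like the lenient parser and another like the strict one, only one of them will act on the invalid Content-Length value, which is exactly the kind of response difference the scan is looking for.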

Strategies look to be slightly more involved, although the DUPLICATE and SINGLE strategies are controlled simply via the SignificantHeader definition. Strategies stemming from different HTTP verbs were very recently added via a method override option when launching the scan. I suspect this is slightly telling, and may be a good place to start looking for easily overlooked discrepancies.

Regardless, if you'd like to add more methods-based strategies you should only need to add a new for loop somewhere around here that updates the base request using base.withMethod("OPTIONS") and then continues with the normal logic of the scan.
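The shape of that loop might look something like the sketch below. It works on raw request strings so that it runs on its own; `MethodStrategySketch`, its string-based `withMethod` helper and `baseRequests` are hypothetical stand-ins for the extension's real request objects and scan logic, which I have not reproduced here.

```java
import java.util.ArrayList;
import java.util.List;

class MethodStrategySketch {
    // Stand-in for base.withMethod(...): swaps the first token of the request line.
    static String withMethod(String rawRequest, String method) {
        int firstSpace = rawRequest.indexOf(' ');
        return method + rawRequest.substring(firstSpace);
    }

    // Builds one base request per method; each would then be fed into the normal scan logic.
    static List<String> baseRequests(String rawRequest, List<String> methods) {
        List<String> bases = new ArrayList<>();
        for (String method : methods) {
            bases.add(withMethod(rawRequest, method));
        }
        return bases;
    }

    public static void main(String[] args) {
        String base = "GET /search HTTP/1.1\r\nHost: example.com\r\n\r\n";
        for (String req : baseRequests(base, List.of("GET", "POST", "OPTIONS"))) {
            // In the real scan, this is where the existing discrepancy checks would run.
            System.out.println(req.substring(0, req.indexOf("\r\n")));
        }
    }
}
```

The list of methods is the only thing you would need to tune; which verbs are worth trying is an open question and a good candidate for experimentation.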

Hopefully you now have a good idea of how easily extendable the parser discrepancy scan is. If you find success, collect as many bounties / reports as you'd like, and then if you're up for it, submit a pull request so that other testers can use your technique. For extra points, consider writing up a blog post so that the community can spread the word that yet another desync technique has been discovered.

Overall, how can we, the cyber security community, use the desync endgame to our advantage? HTTP/1.1 isn't going away anytime soon. While an HTTP/1.1-free world is the goal, huge work is required from CDNs, proxies and cloud providers alike. This will take time, and there's no getting around that fact.

For bug bounty hunters, this represents one of the largest opportunities you've ever seen. Novel desync techniques often lie a single character away, and that's before you consider the near-infinite combinations and permutations of the existing techniques already implemented in open-source tooling. Get scanning. Surely a few more hundred thousand dollars in bounties will start to tip the scales.

As for consultancies and internal penetration testers, if you're fed up of re-testing seemingly hardened applications running HTTP/2 with downgrading, now is your moment. Make sure your remediation advice is up to date, and spend some extra time fuzzing for parser discrepancies. I am certain you won't be disappointed.

The application security world is now more equipped than ever to take control of entire applications using a technique first conceived in 2004. Whether you're a seasoned desync expert, a newbie who's just been stung by a pipelining false positive, or someone who simply wants to help prevent a series of inevitable Black Hat talks titled "I told you so", join the desync endgame now and help us kill HTTP/1.1.