How a 70-Tester Team Scales AppSec Expertise With Burp AI (Orange Cyberdefense)

Customer snapshot

- Industry: Security Services and Consulting

- Size: 2,500+ employees worldwide

- Region: Global presence with operations across Europe, the Americas, Africa, the Middle East, and Asia Pacific

- Headquarters: Paris La Défense, France

- Core Services: Managed security, threat detection & response, vulnerability management, penetration testing and consulting

The Company: Orange Cyberdefense

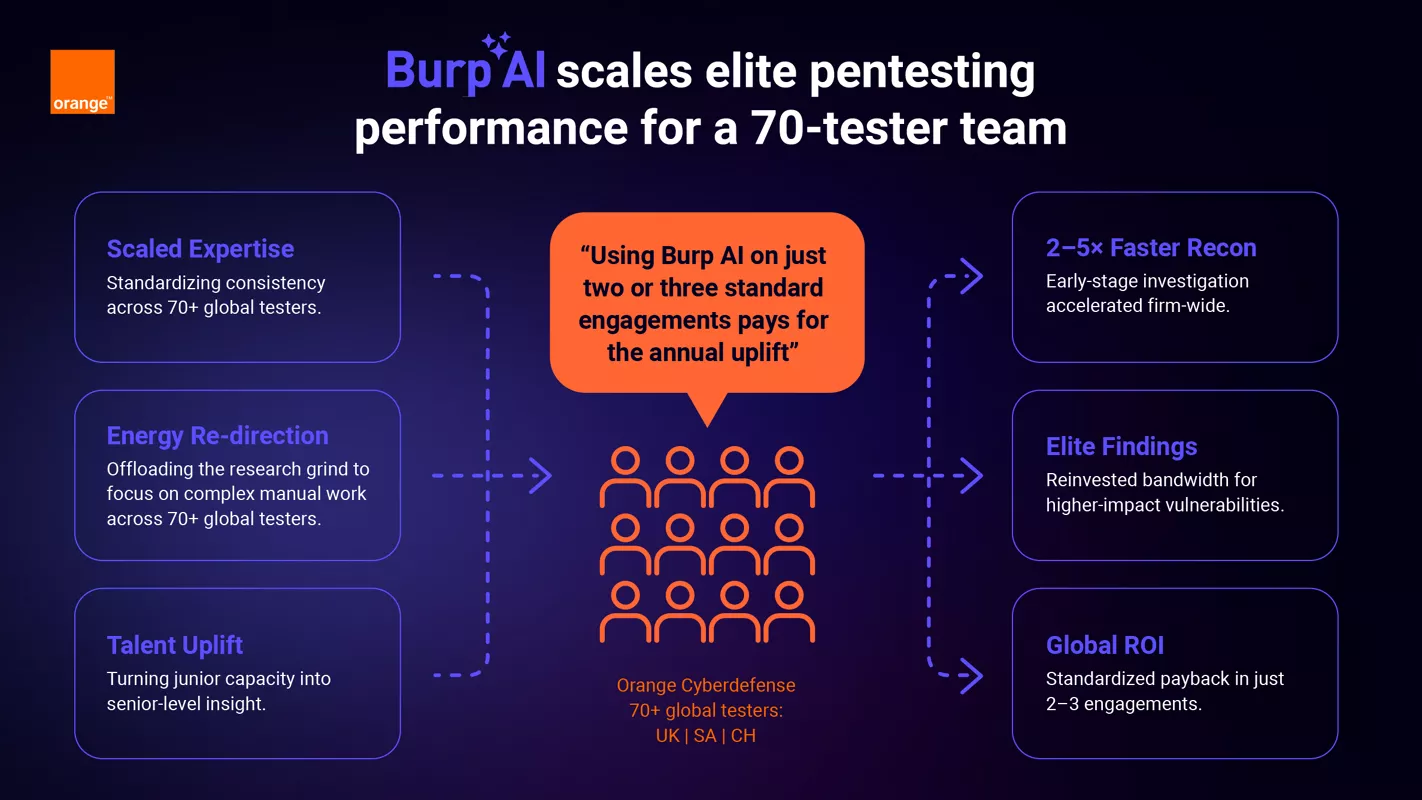

With over 2,500 employees and penetration testers worldwide (including the renowned SensePost and SCRT teams), Orange Cyberdefense operates at a scale where consistency, depth, and practitioner judgement matter as much as speed.

This case study focuses on one of its core penetration testing groups: a 70-tester team distributed across South Africa, the UK, and Switzerland, operating within Orange Cyberdefense’s broader security testing practice.

The Challenge: Compressing research without compromising craft

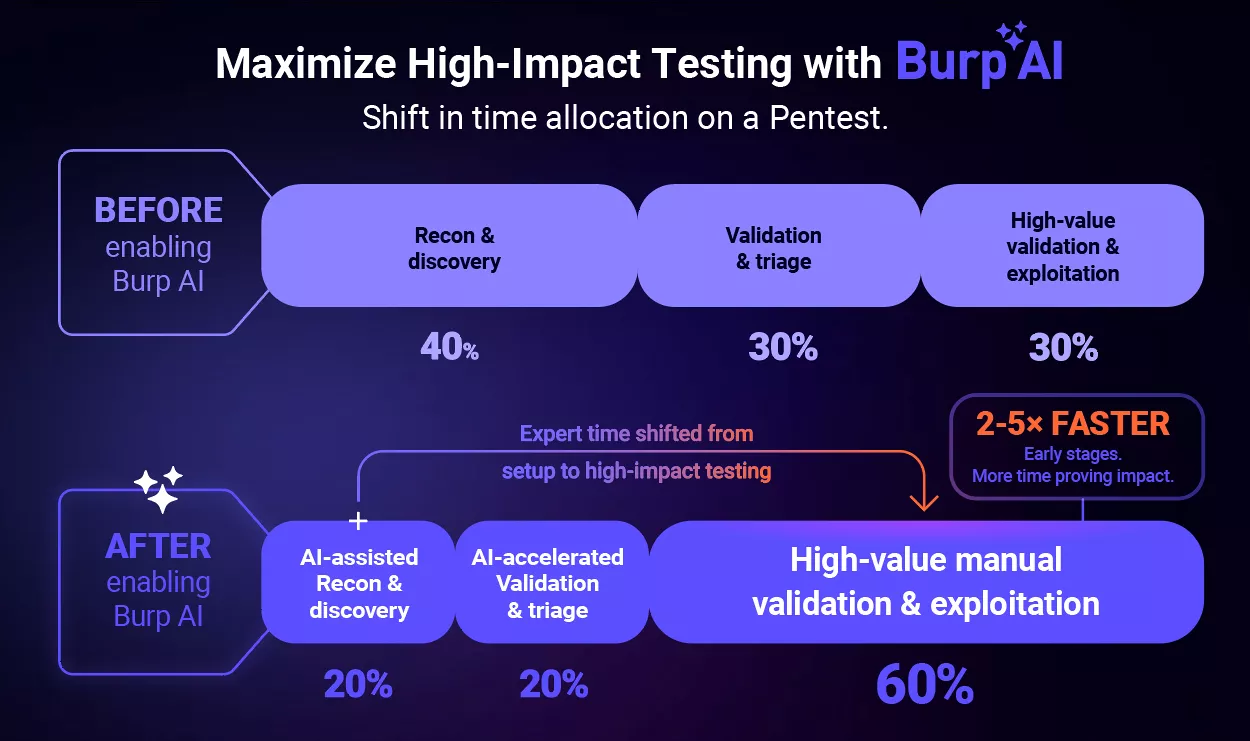

On every engagement, testers deliberately balance speed and coverage: deciding when to go deep on a promising lead, when to broaden exploration, and how to invest limited time without sacrificing quality.

The SensePost team, in particular, has built its reputation on deep technical understanding, creativity, and human intuition.

But as applications grew more complex and engagements more time-compressed, a familiar problem became harder to ignore: the front-loaded research phase of a pentest.

Before meaningful exploitation could begin, testers were spending large amounts of time:

- Fingerprinting unfamiliar technology stacks.

- Reviewing vendor documentation.

- Establishing baseline hypotheses about frameworks, WAFs, and application behavior.

Multiplied across a global team of 70 testers, this research overhead became a growing drain on engagement time.

The Solution: Domain-specific AI within testing workflows

Teams first experimented with general-purpose LLMs to accelerate the discovery and validation phases, testing where they could meaningfully reduce research effort without missing relevant findings.

While some tools showed promise, results were mixed. Outputs often lacked security-specific context, required heavy prompt engineering, and raised concerns, mainly around cost, governance, and repeatability in real client environments.

Burp AI stood out because it was not positioned as a replacement for testers, nor as a generic chatbot bolted onto a security tool.

Instead, it was embedded directly into Burp Suite and designed to assist with specific pentesting tasks where human effort was being inefficiently consumed.

For White, one of the key differentiators was this frictionless integration.

The Impact: 2–5× faster completion of early-stage investigation and validation tasks

Choosing a Burp AI subscription removed the uncertainty associated with usage-based credits, replacing ad-hoc consumption with a predictable, centrally managed model better suited to a distributed team.

Used as a native, context-aware tool within existing workflows, Burp AI assisted testers with tasks such as:

- Interpreting unfamiliar application behavior.

- Generating targeted wordlists and test inputs.

- Accelerating hypothesis validation during manual testing.

Crucially, every action remained human-initiated and human-controlled.

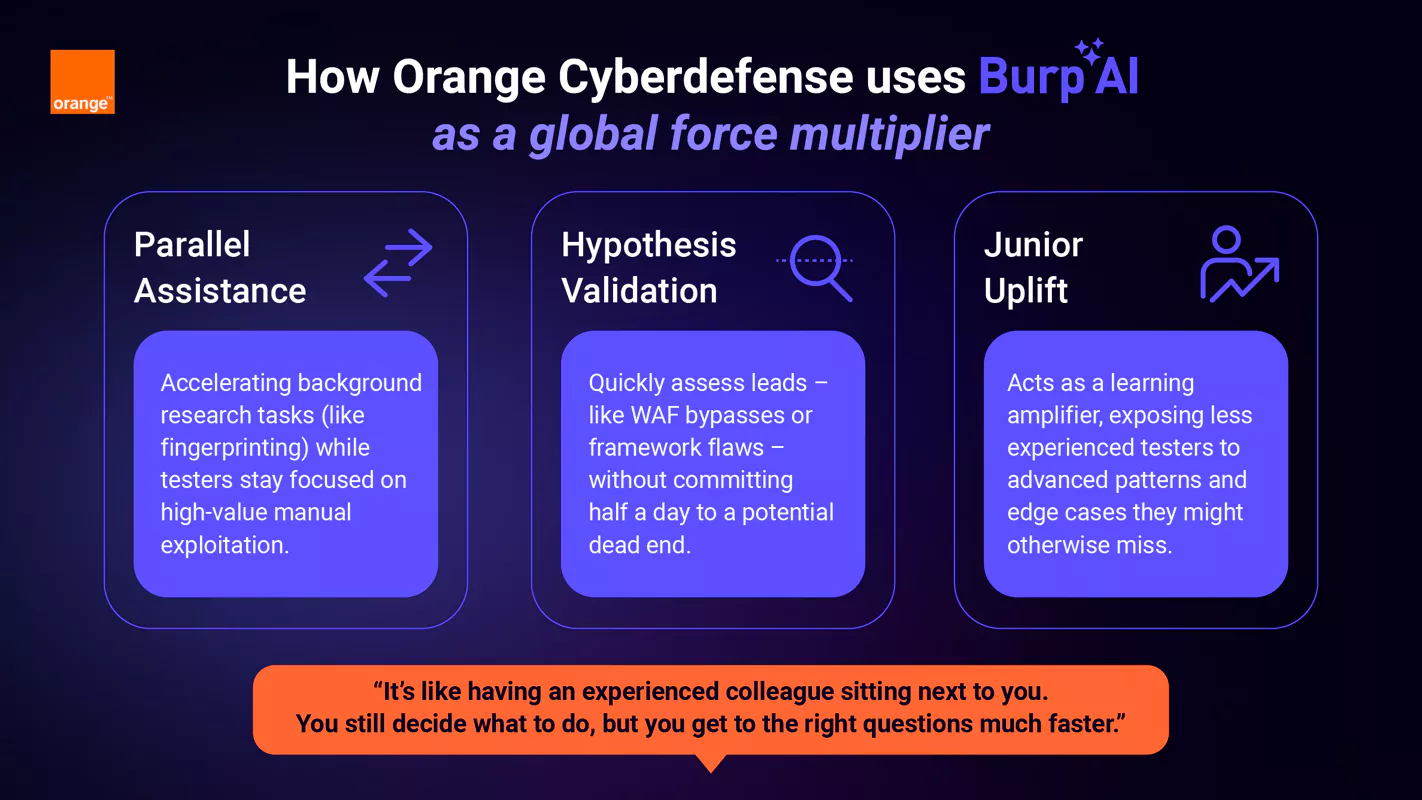

The consensus from White’s team was that “it’s like having an experienced colleague sitting next to you. You still decide what to do, but you get to the right questions much faster.”

Rather than being mandated, adoption was driven organically by practitioners, who quickly identified where the tool added value. Three usage patterns emerged:

Parallel Assistance: While testers manually explored applications, Burp AI was used to accelerate background research tasks such as technology fingerprinting and initial attack surface exploration. This allowed senior testers to stay focused on creative problem-solving rather than setup work.

Hypothesis Validation: Testers used Burp AI to quickly assess whether a potential lead, such as a suspected WAF bypass or framework-specific weakness, was worth deeper manual investment.

Junior Uplift: For less experienced testers, Burp AI acted as a learning amplifier, exposing them to testing patterns, sequences, and techniques they might not yet have encountered independently.

Deeper validation and bandwidth for higher-impact findings

While Orange Cyberdefense deliberately avoids optimizing purely for time saved, the benefits of Burp AI were immediate.

Task acceleration: Senior testers reported specific manual tasks completing 2–5× faster, particularly in early-stage investigation and validation, for example validating suspected CSRF issues or exploring request smuggling paths while continuing manual testing in parallel.

Improved focus: By offloading high-effort research work, such as technology fingerprinting, documentation review, and early hypothesis generation, teams could spend more time on complex vulnerabilities that required human judgment, contextual understanding, and deeper manual validation.

Quality uplift: Junior testers reported improved confidence and clearer reporting, with Burp AI helping surface alternative testing paths and edge cases, like client-side storage issues, that might otherwise be missed or deprioritised during early investigation.

From an operational perspective, the subscription model also delivered fast ROI.

AI-enhanced methodology that scales human expertise

“The goal was never to replace people,” White concludes. “It was to remove the frustrating time, so our testers can spend more energy on the fun stuff—the hard problems that actually matter.”

By treating AI as a thinking partner rather than an autonomous actor, Orange Cyberdefense has found a way to scale expertise, uplift junior staff, and preserve the craft of pentesting, all while creating bandwidth to deliver better outcomes for clients.

Ready to enable AI-assisted testing across your team?

Pilots show up to 60% faster vulnerability discovery, 30% fewer false positives, and 20%+ productivity gains across pentesting teams.